Azure Data Factory

A managed service for orchestrating and managing diverse data, especially in hybrid environments where some data sources reside in the cloud and others are on-premise. Developers can use Data Factory to transform semi-structured, unstructured and structured data from on-premises and cloud sources into trusted information.This Services allows developers to compose data storage, movement, and processing services into streamlined data pipelines.

Insights

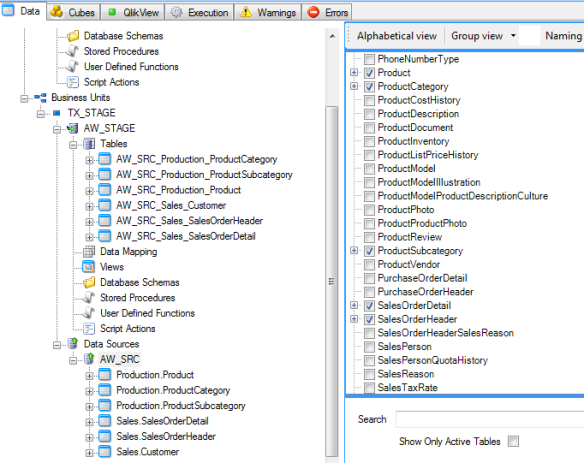

Data Analytics has begun to change its emphasis from the traditional data warehouse as it ‘s one true source for analytics to hybrid environments. Companies are looking for insights into all kinds of data from sensor data, to log files, and social media. There is still a need for data warehouses and they will be moved to the cloud in conjunction with Big data for better insights. Data Factory is the Azure ETL tool used to be able to manage these hybrid environments. The Azure Data Factory helps the business by providing a solution to the problem of hybrid environments and offer the plumbing necessary to help them exist together in the Cloud.

Data Factory Demo

I’m going to show the Azure Data Factory Preview interface and do a simple demonstration on how to move data from an On Premise SQL Server table to an Azure SQL Server table. We will see how to create a Data Gateway, Linked Services, Datasets, and Pipelines. The basic tools needed are the Azure Portal, Azure PowerShell, and a text editor. We will be creating objects in the Azure Portal and programming JSON files that will be run by PowerShell commands.

New Azure Portal

The site for the Azure Portal allows you to pin objects to the main screen or access them from a menu to the left. The icon with the building is a Data Factory.

Data Factory

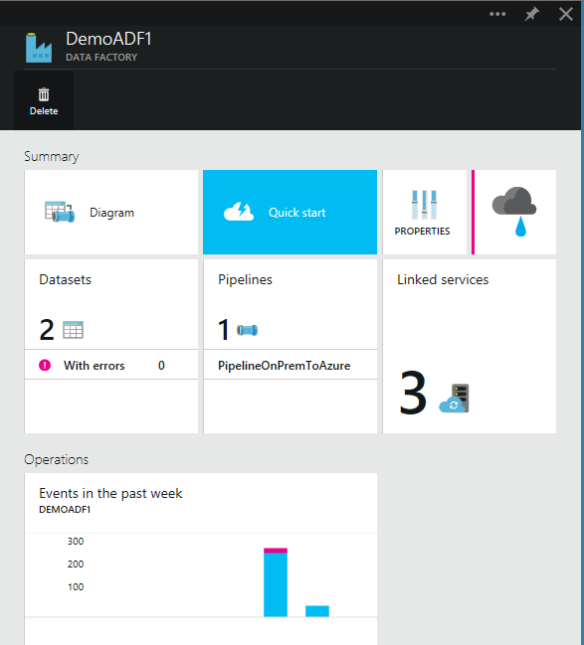

Once you click on the DemoADF1 (Data Factory) you will see it open to a section with more options. This is a simple data factory that well go through in detail. The data factory UI shows 4 important sections that we will cover today which are:

1. Linked services

2. Datasets

3. Pipelines

4. Diagram

Here is the PowerShell command to add a new data factory. We can set variables for the data factory and resource group and then add them to the command.

$df = "DemoADF1" $rg = "Default-SQL-EastUS" #New-AzureDataFactory -ResourceGroupName $rg -Name $df -Location "WestUS"

Linked services

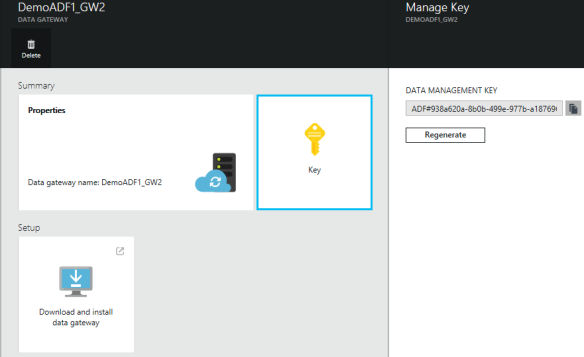

The first thing we want to set up is the Linked service by adding a Data Gateway to our On Premise machine. This will allow us to access the On Premise SQL Server instance in the Data Factory. Select Linked services and go to the Data dateway button at the top which will give you a New data gateway screen. Add the name and region for the gateway.

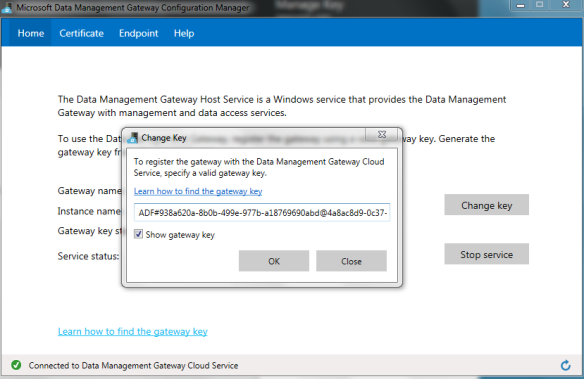

Configure and register the data gateway. Get the registered key and add to the on Premise Microsoft Data Gateway application on the local machine. You can install Microsoft Data Gateway application from this link: http://www.microsoft.com/en-us/download/details.aspx?id=39717

Add register key to the On Premise Microsoft Data Gateway.

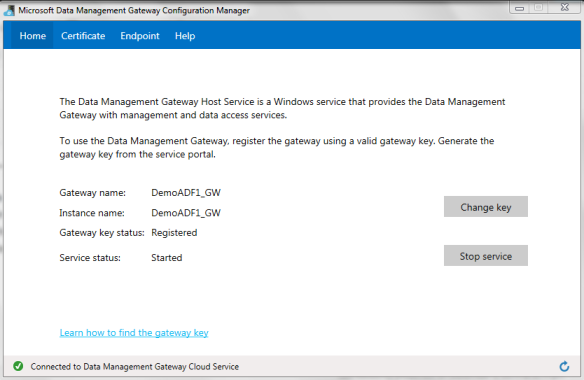

Once the key is registered you will see a registered status and the same name of the gateway will appear in the On Premise machine.

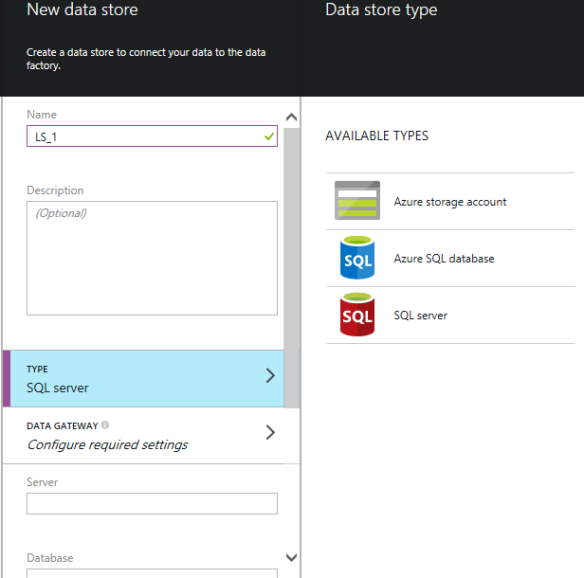

We then want to add a Data Store for this On Premise SQL Server which can be done through the Azure Portal or from a PowerShell script using a JSON file. I will show you both methods below.

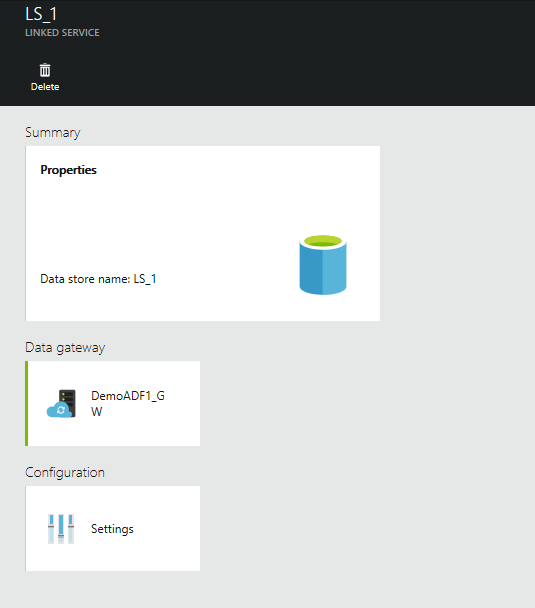

This is my On Premise SQL Server Linked service via Azure Portal setup.

Add the Data gateway to the On Premise SQL Server Linked Service.

Here is my On Premise SQL Server Linked Service via PowerShell setup. MyOnPremisesSQLDB.json file is created below to set the type, connection string, gatewayname and gatewaylocation.

{

"name": "MyOnPremisesSQLDB",

"properties":

{

"type": "OnPremisesSqlLinkedService",

"connectionString": "Data Source=MRNR-E6400-01\\JKSQLSERVER;Initial Catalog=AdventureWorks2012;Integrated Security=False;User ID=jesse;Password=******;",

"gatewayName": "DemoADF1_GW",

"gatewayLocation": "westus"

}

}

Then we execute the JSON script using Aure PowerShell. First we need to connect to the Azure Account using the add account script and login to azure.

Add-AzureAccount

We need to add in the Azure Data Factory cmdlets with the following command

switch-azuremode AzureResourceManager

Finally we can execute the New-AzureDataFactoryLinkedService which asks for the resource group name, data factory name, linked service, and link to the JSON file.

New-AzureDataFactoryLinkedService -ResourceGroupName "Default-SQL-EastUS" -DataFactoryName "DemoADF1" -Name "LS_1" -File "C:\\Powershell\\MyOnPremisesSQLDB.json" | Format-List

Next we can add the Azure SQL Server Linked service via Azure portal.

We can also add the Azure SQL Server Linked service via a JSON file and PowerShell. MySQLAzureDB.json file is created below and we need to set the AzureSqlLinkedService type and connection string.

{

"name": "MySQLAzureDB",

"properties":

{

"type": "AzureSqlLinkedService",

"connectionString": "Server=tcp:exv4gm2p9l.database.windows.net,1433;Database=MarinerDB;User ID=jessekraut@exv4gm2p9l;Password=Dask1995;Trusted_Connection=False;Encrypt=True;Connection Timeout=30"

}

}

Run the PowerShell script to create the linked service for the Azure SQL Server connection.

New-AzureDataFactoryLinkedService -ResourceGroupName "Default-SQL-EastUS" -DataFactoryName "DemoADF1" -Name "LS_2" -File "C:\\Powershell\\MySQLAzureDB.json" | Format-List

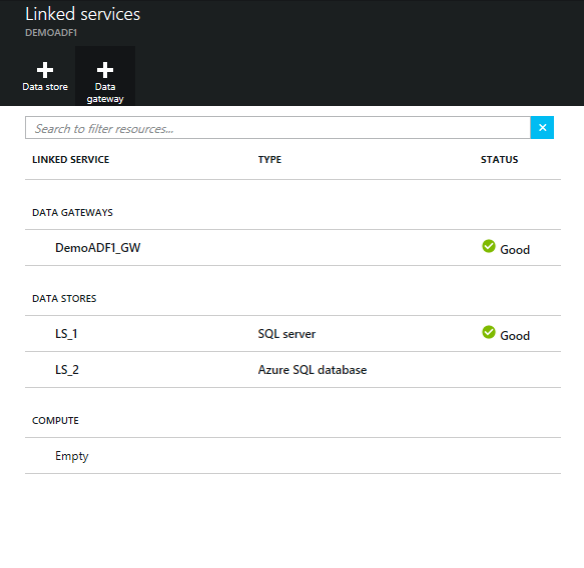

Once we add all the Linked services you see all of them in the Azure Portal. The Good status indicator or no indicator means the connections are correct.

Datasets

We now need to add source and destination tables to the data factory and this is done with JSON metadata files and PowerShell commands. First we want to add the On Premise SQL Server table to the Data Factory. Some important element which are basically the same for source and destination metadata. We have the name; the structure which is the fields and types; we have the location which is the type, table, and linked service. Lastly is the availability which his how often the data slices are run for and in this case its an hour.

{

"name": "MyOnPremisesSQLDB_Table",

"properties":

{

"structure":

[

{"name": "EmpId", "position": 0, "Type": "String"},

{"name": "FirstName", "position": 1, "Type": "String"},

{"name": "LastName", "position": 2, "Type": "String"},

{"name": "AddressName", "position": 3, "Type": "String"}

],

"location":

{

"type": "OnPremisesSqlServerTableLocation",

"tableName": "Employees",

"linkedServiceName": "LS_1"

},

"availability":

{

"frequency": "Hour",

"interval": 1,

"waitOnExternal":

{

"retryInterval": "00:01:00",

"retryTimeout": "00:10:00",

"maximumRetry": 3

}

}

}

}

Execute the PowerShell script to run the New-AzureDataFactoryTable.

New-AzureDataFactoryTable -ResourceGroupName "Default-SQL-EastUS" -DataFactoryName "DemoADF1" -File C:\Powershell\MyOnPremisesSQLDB_Table.json

Next we want to add the Azure SQL Server destination table and here is the JSON script.

{

"name": "MySQLAzureDB_Table",

"properties":

{

"structure":

[

{"name": "EmpId", "position": 0, "Type": "String"},

{"name": "FirstName", "position": 1, "Type": "String"},

{"name": "LastName", "position": 2, "Type": "String"},

{"name": "AddressName", "position": 3, "Type": "String"}

],

"location":

{

"type": "AzureSQLTableLocation",

"tableName": "Employees",

"linkedServiceName": "LS_2"

},

"availability":

{

"frequency": "Hour",

"interval": 1

}

}

}

Execute the Power Shell script to run the New-AzureDataFactoryTable.

New-AzureDataFactoryTable -ResourceGroupName "Default-SQL-EastUS" -DataFactoryName "DemoADF1" -File C:\Powershell\MySQLAzureDB_Table.json

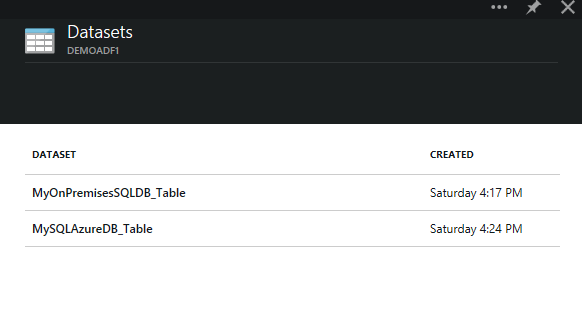

Once we execute both dataset scripts we can see them in the Azure portal under the Datasets section of the data factory.

Pipelines

We now need to create a pipeline which is the connection between the 2 tables where we create activities and transformations. We have name and properties to set and you will notice an activities section to set the type of pipeline activity. In this case we want to set this to be a CopyActivity and include both of the tables in the input and output section. We have an option to set a transformation but in this case we just list the source and destination objects.

{

"name": "PipelineOnPremToAzure",

"properties":

{

"description" : "This pipeline has one Copy activity that copies data from an on-prem SQL to Azure blob",

"activities":

[

{

"name": "CopyFromSQLtoAzure",

"description": "Copy data from on-prem SQL server to Azure",

"type": "CopyActivity",

"inputs": [ {"name": "MyOnPremisesSQLDB_Table"} ],

"outputs": [ {"name": "MySQLAzureDB_Table"} ],

"transformation":

{

"source":

{

"type": "SqlSource",

"sqlReaderQuery": "select * from dbo.Employees"

},

"sink":

{

"type": "SqlSink"

}

},

"Policy":

{

"concurrency": 1,

"executionPriorityOrder": "NewestFirst",

"style": "StartOfInterval",

"retry": 0,

"timeout": "01:00:00"

}

}

]

}

}

Execute the PowerShell script to run the pipeline JSON file.

New-AzureDataFactoryPipeline -ResourceGroupName "Default-SQL-EastUS" -DataFactoryName "DemoADF1" -File C:\Powershell\PipelineOnPremToAzure.json

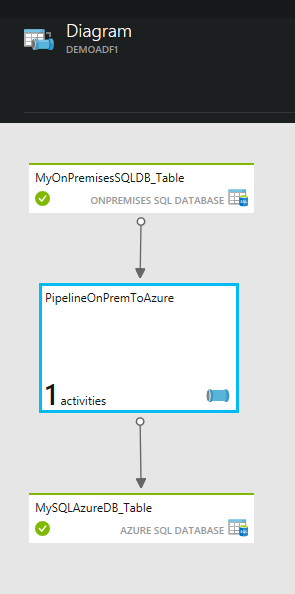

Once we run the PowerShell script we can see dataset and pipeline additions in the Diagram window.

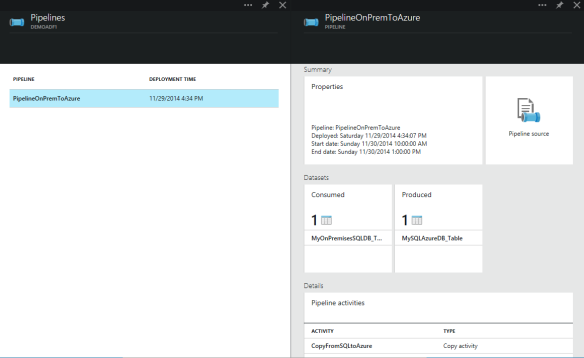

We can also see the pipeline properties by selecting the Pipelines link and drill into PipelineOnPremToAzure.

Our last step is to start the Pipeline Active Period to execute from a start and end time.

Set-AzureDataFactoryPipelineActivePeriod -ResourceGroupName "Default-SQL-EastUS" -DataFactoryName "DemoADF1" -StartDateTime "2014-11-30 3 PM" –EndDateTime "2014-11-30 6 PM" –Name PipelineOnPremToAzure

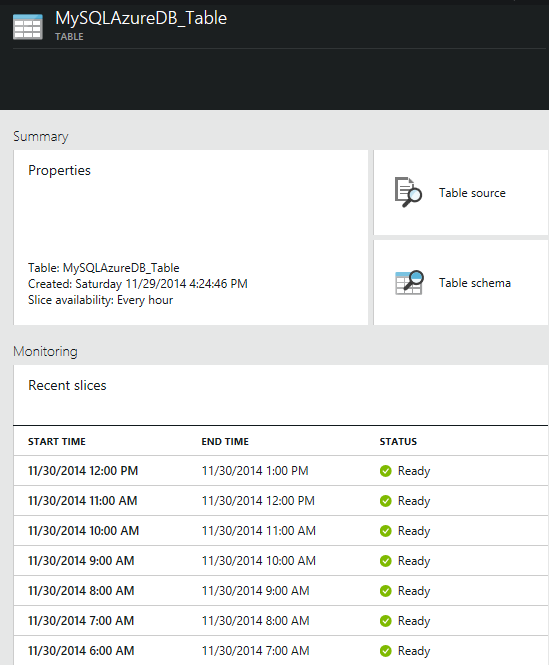

Data Factory Results

You can look in the Employees table in the Azure SQL Server to see that it copied data from the On Premise SQL Server table. Each time it runs there will be an entry with a Ready Status meaning it completed successfully. If it fails it will say Failed and you can click on it to see what the error is for that data slice.

You can access all the files for this demo at the following link AzureDataFactory1

Resources:

Azure Data Factory reference

http://blogs.technet.com/b/dataplatforminsider/archive/2014/10/30/the-ins-and-outs-of-azure-data-factory-orchestration-and-management-of-diverse-data.aspx

Azure Data Factory CmdLets

http://msdn.microsoft.com/en-us/library/dn820234.aspx?WT.mc_id=Blog_SQL_Announce_DI

Azure Linked Service code

http://msdn.microsoft.com/en-us/library/azure/dn834986.aspx

Videos

https://channel9.msdn.com/Shows/Data-Exposed/Introducing-Azure-Data-Factory

http://channel9.msdn.com/Events/TechEd/Europe/2014/DBI-B317

Tutorial

http://azure.microsoft.com/en-us/documentation/articles/data-factory-tutorial/

Tutorial Sample Files

https://github.com/Azure/Azure-DataFactory/tree/master/Samples/ADFWalkthrough

Stay tuned….Azure Data Factory with HDInsight.